Understand and Set Up Vocabularies

What are vocabularies? What are vocabularies used for?

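In the Semantic Web, vocabularies define the concepts and relationships (also called terms) used to describe and represent an area of concern, for example the set of themes a dataset can be assigned to. For an introduction, see the W3C overview: https://www.w3.org/standards/semanticweb/ontology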

How are vocabularies used in piveau?

There are several use cases for vocabularies in piveau. In the following, we go through them one by one.

For many RDF properties, the schema used in piveau recommends a specific vocabulary. One example is dcat:theme, which uses the EU data-theme vocabulary. The following snippet shows a very simple example of linking to a vocable (a concept) described in the data-theme vocabulary:

```turtle
@prefix dcat: <http://www.w3.org/ns/dcat#> .

<http://data.europa.eu/88u/dataset/simple-dataset> dcat:theme <http://publications.europa.eu/resource/authority/data-theme/AGRI> .
```

No further information is needed for this property, because everything else (such as the labels) is already described in the vocabulary. The advantage is that the vocabulary is standardized and its information is reusable. In addition, a user may want to retrieve information about a property value that follows the vocabulary, for example with a SPARQL query:

```sparql
PREFIX dcat: <http://www.w3.org/ns/dcat#>
PREFIX dct: <http://purl.org/dc/terms/>
PREFIX skos: <http://www.w3.org/2004/02/skos/core#>

SELECT DISTINCT ?theme ?prefLabel
WHERE {
  <http://data.europa.eu/88u/dataset/simple-dataset> dcat:theme ?theme .
  ?theme skos:prefLabel ?prefLabel .
}
```

This query retrieves the theme of the dataset together with the corresponding preferred lexical label (skos:prefLabel) of that theme.
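To run such a query from a script, it can be sent to the triplestore's SPARQL endpoint over HTTP. The following is a minimal sketch using only the Python standard library. It assumes the Virtuoso endpoint of the local setup described below (`http://localhost:8890/sparql`) and the standard SPARQL 1.1 JSON results format; the helper names are illustrative, not part of piveau.

```python
import json
import urllib.parse
import urllib.request
from typing import List, Optional, Tuple

# Assumption: Virtuoso's SPARQL endpoint as published by the local docker-compose setup.
SPARQL_ENDPOINT = "http://localhost:8890/sparql"

THEME_QUERY = """\
PREFIX dcat: <http://www.w3.org/ns/dcat#>
PREFIX skos: <http://www.w3.org/2004/02/skos/core#>
SELECT DISTINCT ?theme ?prefLabel
WHERE {
  <http://data.europa.eu/88u/dataset/simple-dataset> dcat:theme ?theme .
  ?theme skos:prefLabel ?prefLabel .
}
"""

# (theme URI, label text, language tag or None)
Row = Tuple[str, str, Optional[str]]


def build_query_url(endpoint: str, query: str) -> str:
    """Encode a SPARQL query as an HTTP GET URL (SPARQL 1.1 Protocol)."""
    return endpoint + "?" + urllib.parse.urlencode({"query": query})


def parse_bindings(results: dict) -> List[Row]:
    """Extract (theme, label, language) rows from SPARQL JSON results."""
    rows = []
    for binding in results["results"]["bindings"]:
        label = binding["prefLabel"]
        rows.append((binding["theme"]["value"], label["value"], label.get("xml:lang")))
    return rows


def query_themes(endpoint: str = SPARQL_ENDPOINT) -> List[Row]:
    """Run the theme query against the triplestore and return the rows."""
    request = urllib.request.Request(
        build_query_url(endpoint, THEME_QUERY),
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request) as response:
        return parse_bindings(json.load(response))
```

`query_themes()` returns one row per label, so a theme with labels in several languages appears several times; adding `FILTER(lang(?prefLabel) = "en")` to the query restricts the result to English labels.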

Note: The triplestore used by piveau cannot access or resolve external resources. For a query like the one above to return labels, the graph of the vocabulary must therefore be stored in the triplestore that piveau uses.
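One way to check whether a vocabulary has actually been loaded into the local triplestore is an `ASK` query for a concept's label: if the vocabulary graph is missing, the answer is `false`, even though the concept URI itself is valid. A minimal sketch, assuming the Virtuoso endpoint of the local setup below (`http://localhost:8890/sparql`); the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def ask_query_for(concept_uri: str) -> str:
    """Build an ASK query: does the triplestore know a skos:prefLabel for the concept?"""
    return (
        "PREFIX skos: <http://www.w3.org/2004/02/skos/core#>\n"
        f"ASK {{ <{concept_uri}> skos:prefLabel ?label }}"
    )


def is_resolvable(concept_uri: str, endpoint: str = "http://localhost:8890/sparql") -> bool:
    """Return True if the concept's vocabulary is present in the local triplestore."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": ask_query_for(concept_uri)})
    request = urllib.request.Request(url, headers={"Accept": "application/sparql-results+json"})
    with urllib.request.urlopen(request) as response:
        # SPARQL 1.1 JSON results for ASK queries carry a top-level "boolean" member.
        return bool(json.load(response)["boolean"])
```

For example, `is_resolvable("http://publications.europa.eu/resource/authority/data-theme/AGRI")` should only return `True` once the data-theme vocabulary has been imported.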

Another use is in the data provider interface. Staying with the example above, a user may want to add a data theme to a new dataset. Since resource URIs are not very user-friendly, they are resolved and presented in a readable form.
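Resolving a URI for display essentially means picking the preferred label in the user's language. A minimal sketch of such a lookup, assuming the labels of a vocable have already been fetched into a language-to-label mapping; the fallback order (user language, then English, then the last URI segment) is an assumption for illustration, not necessarily what the piveau interface does.

```python
from typing import Dict


def display_label(uri: str, pref_labels: Dict[str, str], lang: str) -> str:
    """Pick a human-readable label for a vocabulary URI."""
    # Prefer the user's language, then fall back to English.
    for candidate in (lang, "en"):
        if candidate in pref_labels:
            return pref_labels[candidate]
    # Last resort: show the final path segment of the URI, e.g. "AGRI".
    return uri.rstrip("/").rsplit("/", 1)[-1]
```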

Vocabularies are also used in the search service. Properties that use a vocabulary are resolved before indexing, so that the vocabulary information (e.g. labels) makes datasets easier to find.

Which services are involved?

For harvesting:

- piveau-consus-importing-euvoc

- piveau-consus-importing-piveauvoc

- piveau-consus-exporting-voc

For storage and access:

- piveau-hub-repo

- piveau-hub-search

How does the harvesting process work?

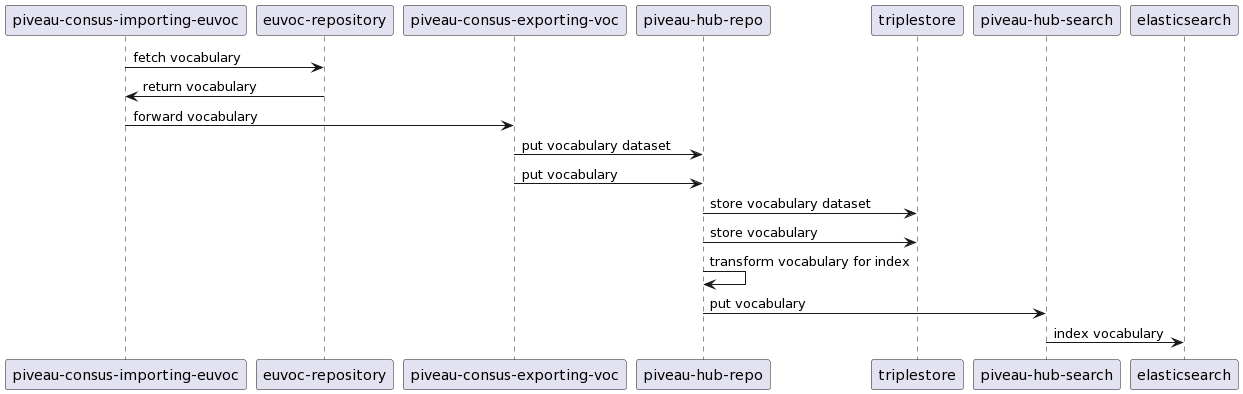

Standard process:

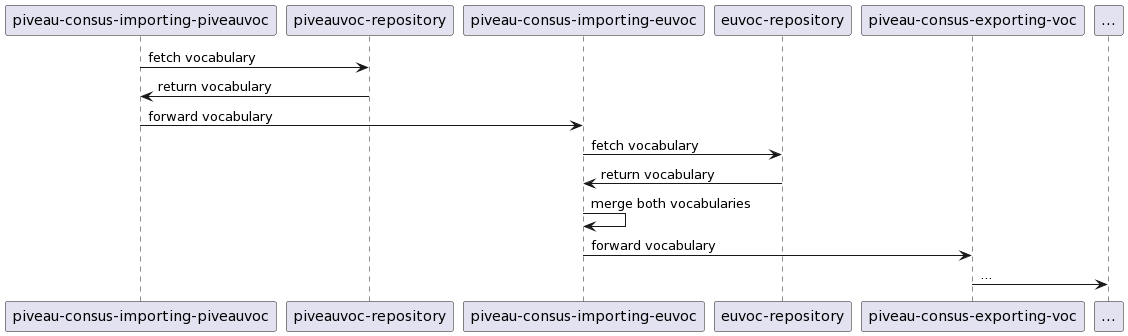

Combining both importers:

How are vocabularies organized?

In hub-repo, vocabularies are organized in a catalogue. Each dataset in this catalogue stores the metadata of one vocabulary; most importantly, the hash of the vocabulary and its URI. The hash is stored as the dataset's identifier (dct:identifier), and the access URL of the dataset's distribution holds the vocabulary URI, i.e. it links to the vocabulary graph itself. Each time a vocabulary is imported, its hash is compared with the stored one to prevent unnecessary forwarding. As the vocabularies catalogue is only used for internal organization, it is declared as hidden, which excludes the catalogue and its vocabulary metadata datasets from indexing.

The vocabularies themselves, however, are indexed and therefore forwarded to hub-search. The search service stores each vocabulary in a separate index, and all of these indices share the same mapping. Each index follows the "vocabulary_*" naming pattern, and each vocable is a searchable document in that index. Whenever a vocabulary is created or updated in hub-repo (i.e. in the triplestore), each vocable is converted into JSON that fits the vocabulary mapping. The vocables are then packed together and forwarded to hub-search.
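The hash comparison can be sketched as follows. This assumes a SHA-256 digest over the raw vocabulary file and that the `dct:identifier` of the existing vocabulary dataset (if any) has already been looked up; the actual hash algorithm and input used by the importers may differ, and the function names are hypothetical.

```python
import hashlib
from typing import Optional


def vocabulary_hash(content: bytes) -> str:
    """Hash the raw vocabulary content (assumption: SHA-256 over the file bytes)."""
    return hashlib.sha256(content).hexdigest()


def needs_forwarding(content: bytes, stored_identifier: Optional[str]) -> bool:
    """Forward only if no vocabulary dataset exists yet or its stored hash differs."""
    return stored_identifier is None or stored_identifier != vocabulary_hash(content)
```

With a check like this, re-running a pipe on an unchanged vocabulary would stop at the importer, and neither hub-repo nor hub-search would be updated.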

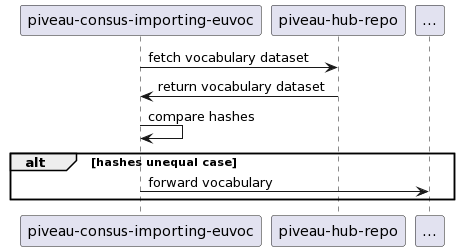
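To illustrate the conversion step, the sketch below turns SKOS concepts into JSON documents and groups them under an index name of the form `vocabulary_<id>`. The field names `id`, `pref_label` and `resource` are taken from the replacement configuration in the enrichment section below; the exact index name, the payload structure (`index`/`vocables`) and deriving `id` from the last URI segment are assumptions for illustration only.

```python
import json
from typing import Dict


def vocable_to_json(concept_uri: str, pref_labels: Dict[str, str]) -> dict:
    """Map one SKOS concept to a search document (field layout is an assumption)."""
    return {
        "id": concept_uri.rstrip("/").rsplit("/", 1)[-1],
        "pref_label": dict(pref_labels),
        "resource": concept_uri,
    }


def build_vocabulary_payload(vocabulary_id: str, concepts: Dict[str, Dict[str, str]]) -> dict:
    """Pack all vocables of one vocabulary for its 'vocabulary_*' index (hypothetical shape)."""
    return {
        "index": f"vocabulary_{vocabulary_id}",
        "vocables": [vocable_to_json(uri, labels) for uri, labels in concepts.items()],
    }


payload = build_vocabulary_payload(
    "dataset-type",
    {"http://publications.europa.eu/resource/authority/dataset-type/TEST_DATA": {"en": "Test data"}},
)
print(json.dumps(payload, indent=2))
```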

Importers check whether an update is necessary before forwarding:

Hands-on locally

Create a ./pipes folder and add a vocabulary pipe to it, e.g. with the file name vocabularies-political-geocoding-level.json:

```json
{
  "header": {
    "context": "DEU",
    "version": "2.0.0",
    "transport": "payload",
    "id": "44c56ef6-1f73-45bf-a7e4-f56f66f905b5",
    "name": "vocabularies-political-geocoding-level",
    "title": "Harvesting Vocabulary - political-geocoding-level"
  },
  "body": {
    "segments": [
      {
        "header": {
          "name": "piveau-consus-importing-euvoc",
          "segmentNumber": 1,
          "title": "Importing DCAT-AP.de Vocabulary",
          "processed": false
        },
        "body": {
          "config": {
            "address": "https://www.dcat-ap.de/def/%s",
            "resources": [
              {
                "id": "political-geocoding-level",
                "fileName": "politicalGeocoding/Level/1_0.rdf",
                "targetGraph": "http://dcat-ap.de/def/politicalGeocoding/Level",
                "dataset": {
                  "title": "Political geocoding level",
                  "description": "DCAT-AP.de related vocabulary for political geocoding level",
                  "distribution": {
                    "description": "SKOS Concept Scheme"
                  }
                }
              }
            ]
          }
        }
      },
      {
        "header": {
          "name": "piveau-consus-exporting-voc",
          "segmentNumber": 2,
          "title": "Exporting EU Vocabulary",
          "processed": false
        },
        "body": {}
      }
    ]
  }
}
```
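If several DCAT-AP.de vocabularies are needed, writing each pipe by hand gets repetitive. The following sketch generates a pipe with the same structure as the example above and writes it into the pipes folder. It assumes that any fresh UUID is acceptable as the pipe `id` and that the importer substitutes `fileName` into the `%s` placeholder of `address`; both are inferences from the example, and `build_pipe`/`write_pipe` are hypothetical helpers.

```python
import json
import uuid
from pathlib import Path


def build_pipe(vocab_id: str, file_name: str, target_graph: str,
               title: str, description: str) -> dict:
    """Build a harvesting pipe for one DCAT-AP.de vocabulary."""
    importer = {
        "header": {
            "name": "piveau-consus-importing-euvoc",
            "segmentNumber": 1,
            "title": "Importing DCAT-AP.de Vocabulary",
            "processed": False,
        },
        "body": {
            "config": {
                # Assumption: the importer fills "%s" with the resource's fileName.
                "address": "https://www.dcat-ap.de/def/%s",
                "resources": [{
                    "id": vocab_id,
                    "fileName": file_name,
                    "targetGraph": target_graph,
                    "dataset": {
                        "title": title,
                        "description": description,
                        "distribution": {"description": "SKOS Concept Scheme"},
                    },
                }],
            }
        },
    }
    exporter = {
        "header": {
            "name": "piveau-consus-exporting-voc",
            "segmentNumber": 2,
            "title": "Exporting EU Vocabulary",
            "processed": False,
        },
        "body": {},
    }
    return {
        "header": {
            "context": "DEU",
            "version": "2.0.0",
            "transport": "payload",
            "id": str(uuid.uuid4()),  # assumption: any unique UUID will do
            "name": f"vocabularies-{vocab_id}",
            "title": f"Harvesting Vocabulary - {vocab_id}",
        },
        "body": {"segments": [importer, exporter]},
    }


def write_pipe(pipe: dict, directory: str = "./pipes") -> Path:
    """Write the pipe as <name>.json into the folder mounted into the scheduler."""
    path = Path(directory) / f"{pipe['header']['name']}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(pipe, indent=2), encoding="utf-8")
    return path
```

For example, `write_pipe(build_pipe("political-geocoding-level", "politicalGeocoding/Level/1_0.rdf", "http://dcat-ap.de/def/politicalGeocoding/Level", "Political geocoding level", "DCAT-AP.de related vocabulary for political geocoding level"))` should reproduce the pipe above, apart from the generated `id`.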

Create a docker-compose.yaml with the following content:

```yaml
version: '3'

services:
  piveau-consus-importing-euvoc:
    image: registry.gitlab.com/piveau/consus/piveau-consus-importing-euvoc:latest
    container_name: piveau-consus-importing-euvoc
    logging:
      options:
        max-size: "50m"
    ports:
      - '8090:8080'
    networks:
      - piveau-voc

  piveau-consus-exporting-voc:
    image: registry.gitlab.com/piveau/consus/piveau-consus-exporting-voc:latest
    container_name: piveau-consus-exporting-voc
    logging:
      options:
        max-size: "50m"
    ports:
      - '8091:8080'
    environment:
      - PIVEAU_HUB_ADDRESS=http://piveau-hub-repo:8080
      - PIVEAU_HUB_APIKEY=yourRepoApiKey
    networks:
      - piveau-voc

  piveau-consus-scheduling:
    image: registry.gitlab.com/piveau/consus/piveau-consus-scheduling:latest
    container_name: piveau-consus-scheduling
    logging:
      options:
        max-size: "50m"
    ports:
      - '8092:8080'
      - '8093:8085'
    environment:
      - PIVEAU_SHELL_CONFIG={"http":{"host":"0.0.0.0","port":8085}}
    volumes:
      - ./pipes:/usr/verticles/pipes
    networks:
      - piveau-voc

  piveau-hub-repo:
    image: registry.gitlab.com/piveau/hub/piveau-hub-repo:latest
    container_name: piveau-hub-repo
    logging:
      options:
        max-size: "50m"
    ports:
      - 8081:8080
      - 8082:8085
    environment:
      - PIVEAU_HUB_SEARCH_SERVICE={"enabled":true,"url":"piveau-hub-search","port":8080,"api_key":"yourSearchApiKey"}
      - PIVEAU_HUB_API_KEY=yourRepoApiKey
      - PIVEAU_HUB_SHELL_CONFIG={"http":{},"telnet":{}}
      - PIVEAU_TRIPLESTORE_CONFIG={"address":"http://virtuoso:8890","clearGeoDataCatalogues":["*"]}
      - JAVA_OPTS=-Xms300m -Xmx1g
    networks:
      - piveau-voc

  piveau-hub-search:
    image: registry.gitlab.com/piveau/hub/piveau-hub-search:latest
    container_name: piveau-hub-search
    logging:
      options:
        max-size: "50m"
    ports:
      - 8083:8080
      - 8084:8081
    depends_on:
      - elasticsearch
    environment:
      - PIVEAU_HUB_SEARCH_API_KEY=yourSearchApiKey
      - PIVEAU_HUB_SEARCH_ES_CONFIG={"host":"elasticsearch","port":9200}
      - PIVEAU_HUB_SEARCH_GAZETTEER_CONFIG={"url":"http://doesnotmatter.eu"}
      - JAVA_OPTS=-Xms300m -Xmx1g
    networks:
      - piveau-voc

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.17.2
    container_name: elasticsearch
    logging:
      options:
        max-size: "50m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    ports:
      - 9200:9200
      - 9300:9300
    environment:
      - bootstrap.memory_lock=true
      - discovery.type=single-node
      - xpack.security.enabled=false
      - ES_JAVA_OPTS=-Xms2G -Xmx4G
    networks:
      - piveau-voc

  virtuoso:
    image: openlink/virtuoso-opensource-7
    container_name: virtuoso
    logging:
      options:
        max-size: "50m"
    ports:
      - 8890:8890
      - 1111:1111
    environment:
      - DBA_PASSWORD=dba
      - VIRT_PARAMETERS_NUMBEROFBUFFERS=170000
      - VIRT_PARAMETERS_MAXDIRTYBUFFERS=130000
      - VIRT_PARAMETERS_SERVERTHREADS=100
      - VIRT_HTTPSERVER_SERVERTHREADS=100
      - VIRT_HTTPSERVER_MAXCLIENTCONNECTIONS=100
    networks:
      - piveau-voc

networks:
  piveau-voc:
```

Start all services defined in docker-compose.yaml, e.g. with `docker-compose up -d`.

Wait until everything is up and running.
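Instead of waiting by eye, a small script can poll the published ports until they accept TCP connections. This sketch uses only standard-library sockets; the port list mirrors the `ports` mappings in the docker-compose.yaml above. An open port does not guarantee that a service has finished initializing, so the first requests may still fail briefly.

```python
import socket
import time
from typing import Dict

# Host ports published in the docker-compose.yaml above.
SERVICE_PORTS = {
    "piveau-consus-importing-euvoc": 8090,
    "piveau-consus-exporting-voc": 8091,
    "piveau-consus-scheduling (shell)": 8093,
    "piveau-hub-repo": 8081,
    "piveau-hub-search": 8083,
    "elasticsearch": 9200,
    "virtuoso": 8890,
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_ports(ports: Dict[str, int], host: str = "localhost",
                   deadline: float = 300.0, interval: float = 2.0) -> bool:
    """Poll until every port accepts connections or the deadline (seconds) passes."""
    end = time.monotonic() + deadline
    pending = dict(ports)
    while True:
        for name, port in list(pending.items()):
            if port_open(host, port):
                del pending[name]
        if not pending:
            return True
        if time.monotonic() >= end:
            return False
        time.sleep(interval)
```

Calling `wait_for_ports(SERVICE_PORTS)` blocks until all services listed above are reachable, or returns `False` after five minutes.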

Add the vocabularies catalogue:

```shell
curl --location --request PUT 'http://localhost:8081/catalogues/vocabularies' \
  --header 'x-api-key: yourRepoApiKey' \
  --header 'Content-Type: application/rdf+xml' \
  --data-raw '<rdf:RDF
    xmlns:dct="http://purl.org/dc/terms/"
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
    xmlns:dcat="http://www.w3.org/ns/dcat#"
    xmlns:edp="https://europeandataportal.eu/voc#"
    xmlns:foaf="http://xmlns.com/foaf/0.1/">
  <dcat:Catalog rdf:about="https://europeandataportal.eu/id/catalogue/vocabularies">
    <dct:publisher>
      <foaf:Agent>
        <foaf:name xml:lang="en">Data Europa EU</foaf:name>
        <dct:type rdf:resource="http://purl.org/adms/publishertype/NationalAuthority"/>
      </foaf:Agent>
    </dct:publisher>
    <dct:description xml:lang="en">DCAT-AP related Vocabularies</dct:description>
    <dct:title xml:lang="en">Vocabularies</dct:title>
    <edp:visibility rdf:resource="https://europeandataportal.eu/voc#hidden"/>
    <dct:language rdf:resource="http://publications.europa.eu/resource/authority/language/ENG"/>
    <dct:type>dcat-ap</dct:type>
  </dcat:Catalog>
</rdf:RDF>'
```

Launch the pipe via the scheduling shell at http://localhost:8093/shell.html.

Check that the vocabulary is available in hub-repo and hub-search:

* [http://localhost:8081/vocabularies/political-geocoding-level](http://localhost:8081/vocabularies/political-geocoding-level)

* [http://localhost:8083/vocabularies/political-geocoding-level](http://localhost:8083/vocabularies/political-geocoding-level)
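The same check can be scripted. The sketch below requests both URLs and reports the HTTP status code; it only verifies that the vocabulary can be retrieved from hub-repo and hub-search and does not inspect the response body, whose format is not described here. The helper names are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Dict, Iterable

CHECK_URLS = (
    "http://localhost:8081/vocabularies/political-geocoding-level",  # hub-repo
    "http://localhost:8083/vocabularies/political-geocoding-level",  # hub-search
)


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if the service is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx/5xx responses still carry a status code, e.g. 404 if not yet imported.
        return error.code
    except OSError:
        # Covers URLError (connection refused, DNS failure) and timeouts.
        return 0


def check_vocabulary(urls: Iterable[str] = CHECK_URLS) -> Dict[str, int]:
    """Map each URL to its HTTP status code."""
    return {url: http_status(url) for url in urls}
```

The same function can be used for the non-harvested vocabularies described below by passing their URLs, e.g. `check_vocabulary(["http://localhost:8083/vocabularies/iana-media-types"])`.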

Non-harvested vocabularies

Some vocabularies in piveau are not harvested through a pipe. These vocabularies can instead be imported via the hub-search CLI.

Afterwards, you can check:

* [http://localhost:8083/vocabularies/spdx-checksum-algorithm](http://localhost:8083/vocabularies/spdx-checksum-algorithm)

* [http://localhost:8083/vocabularies/iana-media-types](http://localhost:8083/vocabularies/iana-media-types)

Vocabulary enrichment in hub-search

Properties that use a vocabulary are enriched during indexing, either in hub-repo or in hub-search. Enrichment in hub-repo is based on local files, whereas enrichment in hub-search is based on the indexed vocabulary, which can be updated periodically through harvesting. In the future, vocabulary enrichment should be performed in hub-search only. In the following example, the dataset-type vocabulary is used to mark a simple dataset as test data:

```turtle
@prefix dcat: <http://www.w3.org/ns/dcat#> .
@prefix dct: <http://purl.org/dc/terms/> .

<https://piveau.io/set/data/simple-dataset>
    a dcat:Dataset ;
    dct:type <http://publications.europa.eu/resource/authority/dataset-type/TEST_DATA> .
```

During enrichment, the indexed dataset receives a readable and searchable label:

```json
{
  "id": "simple-dataset",
  "type": {
    "id": "TEST_DATA",
    "label": "Test data",
    "resource": "http://publications.europa.eu/resource/authority/dataset-type/TEST_DATA"
  }
}
```

To achieve this enrichment, hub-search must be configured via the vocabulary object inside the Elasticsearch configuration element (PIVEAU_HUB_SEARCH_ES_CONFIG):

```json
{
  ...
  "PIVEAU_HUB_SEARCH_ES_CONFIG": {
    ...
    "vocabulary": {
      "dataset-type": {
        "fields": [
          "type"
        ],
        "excludes": [
          "distributions"
        ],
        "replacements": [
          "id:id",
          "label:pref_label.en",
          "resource:resource"
        ]
      }
    }
  }
}
```

Now we go through the elements one by one. Each key inside the vocabulary element must be the ID of the vocabulary used for enrichment, in this case "dataset-type" for the dataset type vocabulary. The fields element lists all fields to be enriched, here only the "type" field. Since a "type" field also occurs under the "distributions" path, and "distributions.type" uses a different vocabulary, that path is excluded from enrichment via the excludes element. Include paths can be defined in the same way using an "includes" element. The replacements element defines which subfield is filled with which information from the vocabulary: the subfield is on the left of the colon, and the vocabulary field is on the right. In this example, "type.id" is filled with the ID of the vocable, "type.label" with its English preferred label, and "type.resource" with its resource URI.
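The interplay of `fields`, `excludes`, `includes` and `replacements` can be illustrated with a simplified re-implementation. This is not hub-search's actual code; it assumes that, before enrichment, each configured field holds the plain resource URI, that the indexed vocabulary is available as a mapping from resource URI to vocable document, and that include/exclude entries are path prefixes.

```python
from typing import Any, Dict, List


def lookup(doc: Dict[str, Any], dotted: str) -> Any:
    """Resolve a dotted path such as 'pref_label.en' inside a vocable document."""
    value: Any = doc
    for key in dotted.split("."):
        value = value.get(key) if isinstance(value, dict) else None
    return value


def _matches(path: str, prefixes: List[str]) -> bool:
    """True if path equals one of the prefixes or lies below it."""
    return any(path == p or path.startswith(p + ".") for p in prefixes)


def enrich(document: dict, config: dict, vocabulary: Dict[str, dict]) -> dict:
    """Return a copy of document with vocabulary URIs replaced by enriched objects."""
    fields = set(config["fields"])
    excludes = config.get("excludes", [])
    includes = config.get("includes", [])
    # "label:pref_label.en" -> ("label", "pref_label.en")
    replacements = [tuple(r.split(":", 1)) for r in config["replacements"]]

    def selected(path: str) -> bool:
        if _matches(path, excludes):
            return False
        return not includes or _matches(path, includes)

    def walk(node: Any, prefix: str) -> Any:
        if isinstance(node, list):
            # List items share the path of the list, e.g. "distributions.type".
            return [walk(item, prefix) for item in node]
        if not isinstance(node, dict):
            return node
        result = {}
        for key, value in node.items():
            path = f"{prefix}.{key}" if prefix else key
            vocable = vocabulary.get(value) if isinstance(value, str) else None
            if key in fields and vocable is not None and selected(path):
                result[key] = {sub: lookup(vocable, source) for sub, source in replacements}
            else:
                result[key] = walk(value, path)
        return result

    return walk(document, "")
```

Applied to the simple dataset with the configuration above, `enrich` produces the `type` object shown earlier, while a `distributions[].type` value is left untouched because its path is excluded.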

Currently, only information that follows the same schema for all vocabularies is indexed. Future work will need to index additional information for specific vocabularies, such as "corporate-bodies". All vocabularies already have an extension field for such additional information, which can be indexed. The relevant code can be found here: