Quick Start#

Launching a basic version of piveau is straightforward. This guide shows how to launch a minimal version of the Hub and run a simple harvesting job with Consus to get some data into it. piveau uses Docker as its default deployment environment and runs on most operating systems (Linux, macOS and Windows).

Run pre-built Docker images#

Prerequisites#

- Git

- Docker & Docker Compose

If you are testing with Docker Desktop, you need to raise the memory limit, because the default setting restricts memory to 7GB. To keep the system stable, increase the memory allocation to at least 16GB.

You can do this in Docker Desktop under Settings > Resources > Memory Limit.
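To confirm that the new limit has taken effect, the memory visible to the Docker daemon can be read from docker info. The following is a minimal sketch, not part of the piveau tooling; the helper names bytes_to_gib and has_enough_memory are made up for illustration, and the 16 GiB threshold is taken from the recommendation above.

```shell
#!/bin/sh
# Minimum memory suggested by this guide, in GiB.
REQUIRED_GIB=16

# Convert a byte count to whole GiB (integer division, rounds down).
bytes_to_gib() {
    echo $(( $1 / 1024 / 1024 / 1024 ))
}

# Succeeds when the given byte count is at least REQUIRED_GIB.
has_enough_memory() {
    [ "$(bytes_to_gib "$1")" -ge "$REQUIRED_GIB" ]
}

# Ask the Docker daemon how much memory it sees; do nothing if Docker is not installed.
if command -v docker >/dev/null 2>&1; then
    mem_bytes=$(docker info --format '{{.MemTotal}}' 2>/dev/null || true)
    case "$mem_bytes" in
        ''|*[!0-9]*)
            echo "Could not read the memory size from docker info"
            ;;
        *)
            if has_enough_memory "$mem_bytes"; then
                echo "OK: Docker sees $(bytes_to_gib "$mem_bytes") GiB"
            else
                echo "Too low: Docker sees $(bytes_to_gib "$mem_bytes") GiB, want ${REQUIRED_GIB} or more"
            fi
            ;;
    esac
fi
```

The value reported by the daemon may be slightly below the configured limit, so a result just under 16 GiB does not necessarily mean the setting was ignored.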

Hint

If you're testing piveau on an Apple Silicon Mac, make sure you have Rosetta enabled.

Open a terminal and clone the Quickstart repository.

Go into the quick-start directory of the cloned repository.

Here you will find all the necessary files and directories for the next steps.

Run hub and consus#

You need two Docker Compose files; you'll find both in the cloned directory. The images will be pulled from different Docker registries.

Important

On macOS you may need to change the piveau-consus-scheduling port in docker-compose-consus.yml from '5000:5000' to '5001:5000', because macOS uses port 5000 for a system service.
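The port rule above can be captured in a small helper so that later steps (for example the telnet connection to the scheduler) use the matching port. This is only a sketch: the function name scheduler_host_port is hypothetical, and it assumes that port 5000 is actually occupied on macOS, which the note above describes as likely but not certain.

```shell
#!/bin/sh
# Pick the host port for piveau-consus-scheduling: 5001 on macOS, 5000 elsewhere.
# The argument is the kernel name as printed by `uname -s` ("Darwin" on macOS).
scheduler_host_port() {
    case "$1" in
        Darwin) echo 5001 ;;
        *)      echo 5000 ;;
    esac
}

port=$(scheduler_host_port "$(uname -s)")
echo "Port mapping for docker-compose-consus.yml: '${port}:5000'"
echo "Scheduler shell: telnet localhost ${port}"
```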

You can check whether all services are running, e.g. piveau-hub-repo at http://localhost:8081. You should see a web page that describes the piveau hub-repo services.
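Because the containers need some time to start, it can be convenient to poll the service instead of reloading the page by hand. A minimal sketch using curl follows; wait_for_url is a hypothetical helper, and the default of 30 attempts with a 2 second pause is an arbitrary assumption, not a piveau recommendation.

```shell
#!/bin/sh
# Poll a URL until it answers with a successful HTTP status or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
    url=$1
    attempts=${2:-30}
    delay=${3:-2}
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -f: treat HTTP errors (>= 400) as failure, -s: quiet, -o: discard the body
        if curl -fs -o /dev/null --max-time 5 "$url"; then
            echo "up: $url"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "not reachable: $url" >&2
    return 1
}
```

With the function defined, wait_for_url http://localhost:8081 returns once hub-repo responds, or fails after the attempts are used up.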

Harvest data#

Before harvesting data, you need to register a catalogue in the piveau hub.

Execute the following command to register the GovData catalogue.

curl -i -X PUT -H "X-API-Key: yourRepoApiKey" -H "Content-Type: text/turtle" --data @govdata.ttl localhost:8081/catalogues/govdata

Execute the following command to register the data.gov.uk catalogue.

curl -i -X PUT -H "X-API-Key: yourRepoApiKey" -H "Content-Type: text/turtle" --data @data-gov-uk.ttl localhost:8081/catalogues/data-gov-uk

Each command should return 201 Created.
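The two registrations can also be scripted so that only the HTTP status is printed for each catalogue. The sketch below makes a few assumptions: hub-repo is reachable at localhost:8081 as above, yourRepoApiKey stands for the key configured in your setup, and the helper names catalogue_url and put_catalogue are invented for this example. It uses --data-binary rather than --data, because plain --data strips line breaks from the file before sending it.

```shell
#!/bin/sh
# Assumptions: hub-repo listens on localhost:8081 and API_KEY matches its configured key.
HUB_REPO=${HUB_REPO:-http://localhost:8081}
API_KEY=${API_KEY:-yourRepoApiKey}

# Build the catalogue endpoint for a given catalogue id.
catalogue_url() {
    echo "${HUB_REPO}/catalogues/$1"
}

# PUT a Turtle file and print only the HTTP status code.
# Usage: put_catalogue CATALOGUE_ID TURTLE_FILE
put_catalogue() {
    curl -s -o /dev/null -w '%{http_code}' -X PUT \
        -H "X-API-Key: ${API_KEY}" \
        -H "Content-Type: text/turtle" \
        --data-binary "@$2" "$(catalogue_url "$1")"
}

# Register every catalogue whose Turtle file is present in the current directory.
for id in govdata data-gov-uk; do
    if [ -f "${id}.ttl" ]; then
        status=$(put_catalogue "$id" "${id}.ttl")
        echo "${id}: HTTP ${status}"
    else
        echo "${id}: ${id}.ttl not found, skipped"
    fi
done
```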

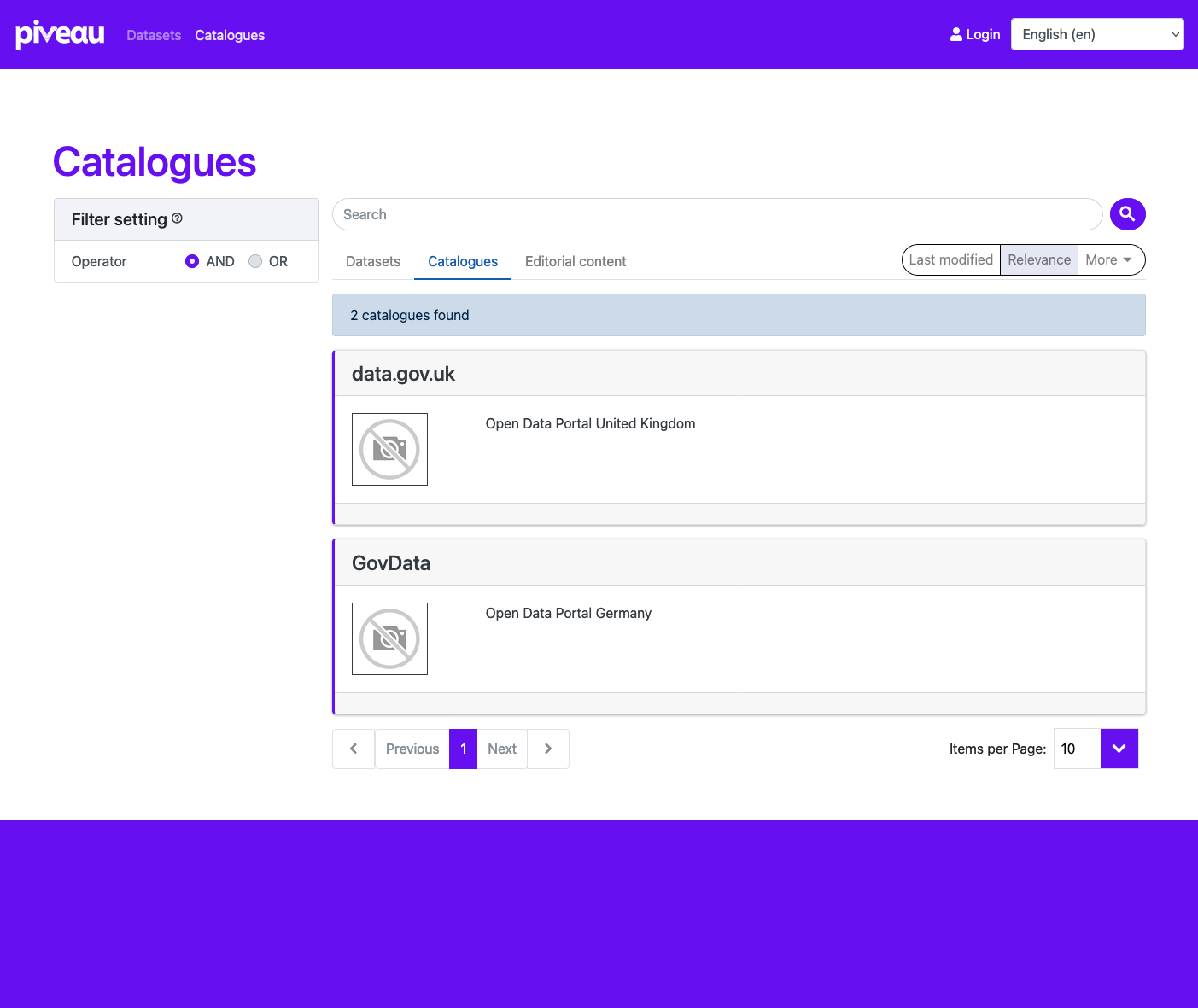

You can check whether the catalogues were created at http://localhost:8080/catalogues?locale=en. You should see both catalogues.

You can start the harvesting process for GovData by choosing one of the following two options:

- Use the CLI interface of the scheduler, either via telnet localhost 5000 (5001 for macOS) or http://localhost:8095/shell.html, and launch the govdata pipe there: launch govdata

- Or create an immediate trigger using the scheduler API:

Here is the same procedure for data.gov.uk:

- Use the CLI interface of the scheduler, either via telnet localhost 5000 (5001 for macOS) or http://localhost:8095/shell.html, and launch the data-gov-uk pipe there: launch data-gov-uk

- Or create an immediate trigger using the scheduler API:

Important

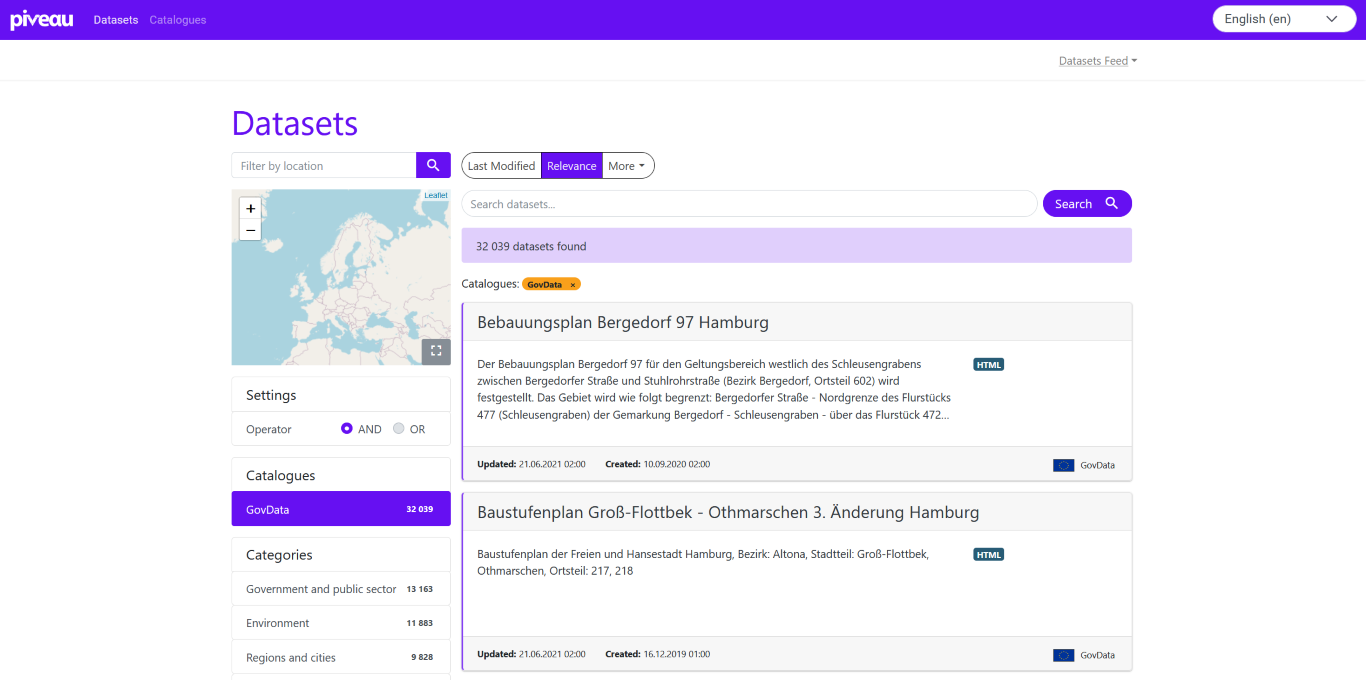

GovData and data.gov.uk each consist of several thousand datasets, so the harvesting process can take quite some time.

That's it!#

You can now start using your new basic piveau instance. Start browsing datasets here: http://localhost:8080

Build & run from source#

You need the following piveau services for this guide:

- piveau-hub-repo

- piveau-hub-search

- piveau-hub-ui

- piveau-consus-scheduling

- piveau-consus-exporting-hub

- piveau-consus-transforming-js

- piveau-consus-importing-ckan

- piveau-consus-importing-rdf

You can find the sources for the Hub here and for Consus here. You also need two Docker Compose files, which you can download here. In addition, some images, such as Elasticsearch, will be pulled from the public Docker Hub.

Prerequisites#

- Java JDK >= 17

- Maven >= 3.6

- Node >= 10 <= v14

- npm >= 5.6.0 <= 6.14.16

- Docker & Docker Compose

- Git

- curl
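The Node and npm ranges are the ones most likely to be missed on a current machine, so a quick check before building can save a failed run. The following sketch compares major versions only, which is coarser than the exact bounds listed above (for npm it accepts any 5.x or 6.x). The helper names major_of and major_in_range are hypothetical. Java and Maven are left out because their version output is less uniform.

```shell
#!/bin/sh
# Extract the major version from strings such as "v14.17.0" or "6.14.16".
major_of() {
    v=${1#v}          # drop a leading "v" (node prints one)
    echo "${v%%.*}"   # keep everything before the first dot
}

# Succeeds when MIN <= major version <= MAX.
# Usage: major_in_range VERSION MIN MAX
major_in_range() {
    m=$(major_of "$1")
    [ "$m" -ge "$2" ] && [ "$m" -le "$3" ]
}

# Report node and npm only if they are installed.
if command -v node >/dev/null 2>&1; then
    if major_in_range "$(node --version)" 10 14; then
        echo "node $(node --version): ok"
    else
        echo "node $(node --version): outside 10..14"
    fi
fi
if command -v npm >/dev/null 2>&1; then
    if major_in_range "$(npm --version)" 5 6; then
        echo "npm $(npm --version): ok"
    else
        echo "npm $(npm --version): outside 5..6"
    fi
fi
```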

Directory structure#

Place the sources and Docker Compose files in the following directory structure:

.

├─ piveau-hub-repo

├─ piveau-hub-search

├─ piveau-hub-ui

├─ piveau-consus-scheduling

├─ piveau-consus-exporting-hub

├─ piveau-consus-transforming-js

├─ piveau-consus-importing-ckan

├─ piveau-consus-importing-rdf

├─ docker-compose-consus-build.yml

└─ docker-compose-hub-build.yml
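Before compiling, it may help to verify that the working directory matches the layout above. The sketch below lists whatever is missing; the function name missing_entries is made up for this example, and the list of entries is copied from the tree shown above.

```shell
#!/bin/sh
# Entries this guide expects in the working directory.
EXPECTED="piveau-hub-repo piveau-hub-search piveau-hub-ui
piveau-consus-scheduling piveau-consus-exporting-hub piveau-consus-transforming-js
piveau-consus-importing-ckan piveau-consus-importing-rdf
docker-compose-consus-build.yml docker-compose-hub-build.yml"

# Print every expected entry that is missing from the given directory (default: current one).
missing_entries() {
    base=${1:-.}
    for name in $EXPECTED; do
        [ -e "${base}/${name}" ] || echo "$name"
    done
}

missing=$(missing_entries .)
if [ -z "$missing" ]; then
    echo "Layout looks complete"
else
    echo "Missing:"
    echo "$missing"
fi
```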

Important

On Windows: Make sure that you are using LF line separators!
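One way to spot files that were checked out with CRLF line endings is to search for carriage returns. The sketch below is an illustration only: has_crlf and list_crlf_files are hypothetical helpers, and limiting the search to .sh, .yml and .yaml files is an assumption about which files matter here.

```shell
#!/bin/sh
# Succeeds when the file contains at least one carriage return (CR),
# which is what CRLF line endings leave behind.
has_crlf() {
    cr=$(printf '\r')
    grep -q "$cr" "$1"
}

# Print every matching file below the given directory (default: current one) that contains a CR.
list_crlf_files() {
    find "${1:-.}" -type f \( -name '*.sh' -o -name '*.yml' -o -name '*.yaml' \) |
    while IFS= read -r f; do
        if has_crlf "$f"; then echo "$f"; fi
    done
}
```

Running list_crlf_files . from the top-level directory prints the affected files, if any. A common way to avoid the conversion in the first place is to clone with git's core.autocrlf option set to input or false.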

Piveau Hub - The Data Storage#

Compile#

Open a shell and execute the following commands:

mvn -f piveau-hub-repo/pom.xml clean package

mvn -f piveau-hub-search/pom.xml clean package

cd piveau-hub-ui && npm install

cp config/user-config.sample.js config/user-config.js && npm run build

cd ..

Build and run images#

Build and start everything with Docker Compose:

This may take a couple of minutes. Upon completion, you can check whether all services are running, e.g. piveau-hub-repo at http://localhost:8081.

Piveau Consus - Get some data with a harvesting run#

Compile#

Download the pipe specification for the German Open Data portal GovData.

The scheduling component requires it. Open a shell and execute the following commands:

mvn -f piveau-consus-importing-rdf/pom.xml clean package -DskipTests

mvn -f piveau-consus-importing-ckan/pom.xml clean package -DskipTests

mvn -f piveau-consus-transforming-js/pom.xml clean package -DskipTests

mvn -f piveau-consus-exporting-hub/pom.xml clean package -DskipTests

cp pipe-govdata.yaml piveau-consus-scheduling/src/main/resources/pipes

mvn -f piveau-consus-scheduling/pom.xml clean package -DskipTests
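The same sequence can be wrapped in a function that stops at the first failing step, so a broken module does not go unnoticed among the later builds. This is a sketch rather than an official build script: build_consus is a hypothetical name, and the MVN variable is an assumption added to allow a dry run.

```shell
#!/bin/sh
# MVN can be overridden (e.g. MVN=echo) to preview the commands without building.
MVN=${MVN:-mvn}

# Build the Consus modules in the order used above, stopping at the first failure.
build_consus() {
    for module in importing-rdf importing-ckan transforming-js exporting-hub; do
        $MVN -f "piveau-consus-${module}/pom.xml" clean package -DskipTests || return 1
    done
    # The scheduling component requires the pipe specification,
    # so copy it into the resources directory before packaging.
    cp pipe-govdata.yaml piveau-consus-scheduling/src/main/resources/pipes || return 1
    $MVN -f piveau-consus-scheduling/pom.xml clean package -DskipTests
}
```

Calling build_consus from the top-level directory runs the five builds; with MVN=echo it only prints the Maven arguments, although the copy step is still performed.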

Build and run images#

Build and start everything with Docker Compose:

This may take a couple of minutes. Upon completion, you can check whether all services are running, e.g. piveau-importing-rdf at http://localhost:8090.

Using Helm Charts#

It is also possible to use Helm charts to deploy piveau components or modules to a Kubernetes cluster.

Paca Repository#

All piveau Helm charts are available through a public repository. To add it to your repo list:

List all currently available Helm charts in the paca repository:

piveau Modules and Components#

Besides the three main modules consus, hub, and metrics, each individual component has its own Helm chart.

Once you are connected to your cluster (including the namespace), installing piveau consus requires just the following command:

See the README for more detailed information on how to use your own values file to customize your deployment.